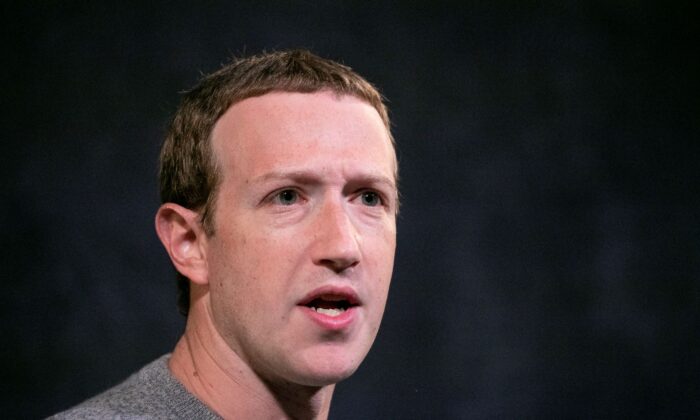

Meta Discontinues Facebook Fact-Checking Program: Key Takeaways

This change marks the end of a prolonged shift toward greater content moderation.

Here’s what to know.

‘Perceived Political Bias’

Meta implemented the fact-checking program in 2016 following Donald Trump’s victory in that year’s presidential election.

The organizations involved in the initiative, such as Snopes, had the ability to scrutinize posts. If they determined a post contained false information, it would either be flagged with an accompanying fact-check note or removed altogether.

“That’s not how things unfolded, particularly in the U.S.,” Joel Kaplan, Meta’s chief global affairs officer, said of the program. “Experts, like everyone else, possess their own biases and viewpoints. This was evident in the selections made regarding what to fact-check and how. Over time, we found too much content being fact-checked that should have been considered legitimate political expression and debate. Our system then imposed real consequences through intrusive labels and decreased exposure. A program designed to educate frequently became a tool for censorship.”

Transitioning to an X Model

In recent years, Meta has been emulating various features of X, previously known as Twitter. Meta’s Threads, initially launched as a video messaging app, was later reworked into an X-style platform for short posts. Zuckerberg also introduced a paid subscription tier, Meta Verified, after Elon Musk’s rollout of Twitter Blue.

Meta’s latest move is to replace the fact-checking program with an X-style, user-driven community notes feature.

“We’re eliminating fact-checkers and substituting them with community notes, akin to X,” Zuckerberg stated.

Kaplan asserted that X’s model has proven successful.

“They empower their community to discern when posts may be misleading and require additional context, allowing individuals from diverse perspectives to determine what context is beneficial for others to view,” he explained. “We believe this approach could better accomplish our original goal of informing users about what they encounter—and is less susceptible to bias.”

Requesting Notes

Community Notes on X relies on contributors who evaluate specific posts they believe contain misleading information.

Even some of Musk’s posts have attracted Community Notes.

X explicitly states that it values contributions from ordinary users rather than relying solely on professionals.

In 2016, before the fact-checking program began, Zuckerberg said that Facebook had traditionally relied on users to help the company identify what was genuine and what was not, but that the problem had grown so complex that it needed to work with fact-checking organizations.

Executives are now turning back to users, emphasizing that the notes will depend on user contributions. Meta will not decide which notes are displayed, according to Kaplan. Only notes that achieve consensus “among individuals with varying perspectives” will be made public.

Mixed Reactions

Some criticized the new direction.

Others, including Musk, praised the changes.

“The First Amendment protects social media companies’ editorial choices regarding the content on their platforms. Still, it’s commendable when platforms voluntarily attempt to minimize bias and unpredictability in their content hosting decisions—especially when they assure users of a free speech culture like Meta does,” Ari Cohn, lead counsel for tech policy at the free speech group FIRE, said in an email to The Epoch Times.