Think Tank Urges UK to Implement AI Incident Reporting System

The report warns the Department for Science, Innovation and Technology that immediate action is needed to prevent potential ‘widespread harm’ in the future.

A report published on Wednesday by the Centre for Long-Term Resilience (CLTR) proposed that the British government set up a system for logging the misuse and malfunctions of artificial intelligence (AI), with the aim of mitigating future problems and risks associated with the technology.

The report warns that, as AI technology advances rapidly, failing to implement such a system could leave the UK unprepared for major incidents that harm society.

According to the report, more than 10,000 safety incidents involving AI systems in active use have been recorded since 2014, based on data compiled by the Organisation for Economic Co-operation and Development (OECD).

A Critical Gap

The report identified a “critical gap” in the UK’s AI regulation that could lead to “widespread harm” if not properly addressed.

CLTR said the UK’s Department for Science, Innovation and Technology (DSIT) needs better visibility of AI incidents arising within government itself. One example of such an incident: a faulty AI system used by the Dutch tax authorities caused financial distress for thousands of families through errors in detecting benefits fraud.

The think tank also raised concerns that the government may be unaware of disinformation campaigns and possible biological weapon development, stressing the need for urgent action to protect UK citizens.

The report stated that DSIT lacks a comprehensive view of emerging incidents and called for a proactive approach to identifying novel harms associated with frontier AI.

‘Encouraging Steps’

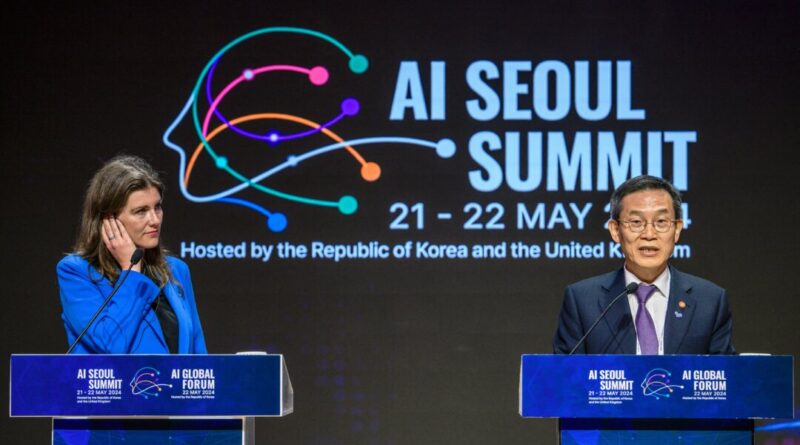

As the UK heads towards the general election on July 4, AI is becoming a more prominent topic of debate. Separately, on May 21 at the AI Seoul Summit, several governments, including the UK and the US, along with the EU, reached an agreement to strengthen AI safety.

The Epoch Times has reached out to DSIT for comment.