Warning: Study Shows AI-Generated Deepfakes Pose Threat to Electoral Integrity

The Centre for Emerging Technology and Security (CETaS) pointed out that there is a lack of clear guidance on preventing the misuse of AI to create deceptive content.

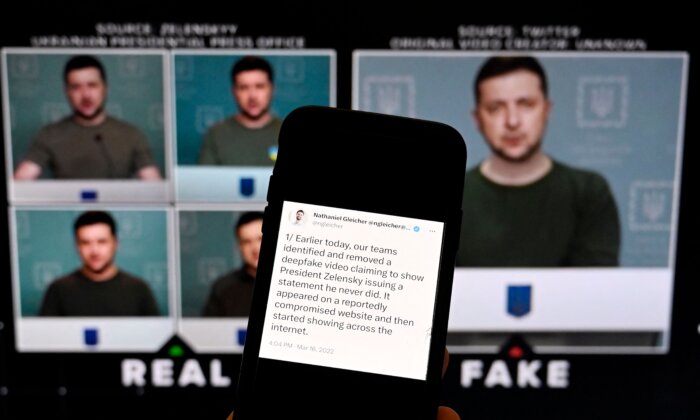

A study warned that highly realistic AI-generated deepfakes with false allegations about political candidates could potentially increase “electoral interference.”

Misleading AI content targeting politicians like Prime Minister Rishi Sunak, Labour leader Sir Keir Starmer, and Mayor of London Sadiq Khan has been a cause for concern.

AI-Generated Voice Clones

CETaS, known for interdisciplinary research on emerging technology and national security policy, emphasized the limited time available before the upcoming general election on July 4 to make significant changes to election security protections or electoral laws.

Despite this, the institute believes that steps can still be taken to enhance short-term resilience against AI-based election threats.

AI tools can fabricate voice clones, images, or videos of political candidates making controversial statements or engaging in contentious activities.

In some instances, such content might simulate a candidate withdrawing from the election or endorsing opponents, or even depict ballot rigging.

The study pointed out that some of these threats may come from hostile actors, while others may originate from the political parties themselves.

It also raised concerns about the potential use of “customised AI malware” to manipulate voting systems or misreport votes, although the UK is considered to be relatively protected due to the continued use of paper-based voting and manual counting.

However, the study found no clear evidence of AI-generated deepfakes impacting election outcomes after analyzing elections in Argentina, Indonesia, Poland, Slovakia, and Taiwan, where candidates predicted to win based on polling data still emerged victorious.

Act Quickly

Despite this, CETaS called for increased regulation and emphasized the lack of clear guidance in this area.

It recommended that the Electoral Commission collaborate with Ofcom and the Independent Press Standards Organisation to publish new guidelines for media reporting on AI-generated content in elections.

Furthermore, it proposed requesting voluntary agreements from political parties outlining the proper use of AI in campaigning and mandating clear labeling of AI-generated election material.

Sam Stockwell, a research associate at The Alan Turing Institute and the lead author of the study, stressed the need for regulators to act swiftly to prevent the misuse of AI in spreading false or misleading electoral information.

Alexander Babuta, director of CETaS, emphasized the importance of taking proactive measures to ensure elections are resilient against potential threats without undermining public trust in the democratic process.

Ransomware

The National Cyber Security Centre (NCSC) highlighted the growing threat of ransomware and extortion attacks, noting their shape-shifting nature and international rise.

It cautioned that actors based in Russia and Iran have been engaging in spear-phishing campaigns targeting politicians, journalists, activists, and other groups to steal sensitive information.

In addition, the NCSC reported that a Chinese state-affiliated group conducted online reconnaissance activities against UK parliamentarians critical of China, although no parliamentary accounts were successfully compromised.

PA Media contributed to this report.